weeknotes: dovekie, jaunty robins

Dovekie

I’m continuing to iterate on Dovekie with feedback from an undergraduate researcher! I hooked up MurreletGUI to it, so it now generates the input boxes automatically based on the inferred schema. It infers a schema the first time you call it (or you can enter overrides, although I’m about to rewrite that part).

I also added a way to add custom variables. I tested it by adding audio signals (using some code generated by AI chatbots) and got it working pretty fast.

I also started working on the README.

One interesting thing is that while Murrelet uses Rust derive macros on its structs and enums, Dovekie fakes it by creating fake struct types. For example, {a: {b: 2}, c: 3} could be happily stored in a struct like { {key: a, value: {key: b, value: 2}}, {key: c, value: 3} }. That has a few problems, but the most pressing was that I wouldn’t be able to reuse the MurreletGUI code that automatically generates an interface for the schema.

So I introduced a level of abstraction: a little schema type that can be derived from either the Rust macro or the Dovekie type. So far, MurreletGUI is the only thing that uses it, but I’m wondering about switching over some other features so I can have less code in my macros.
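Here’s a rough sketch of what that kind of shared schema type could look like. To be clear, this is not the actual Murrelet or Dovekie code: `DynValue`, `Schema`, `HasSchema`, `infer_schema`, and `input_paths` are all made-up names, and the hand-written `HasSchema` impls stand in for what a derive macro might generate.

```rust
use std::collections::BTreeMap;

/// Hypothetical runtime value, standing in for a Dovekie-style dynamic type.
#[allow(dead_code)]
#[derive(Debug, Clone, PartialEq)]
enum DynValue {
    Number(f64),
    Text(String),
    Map(BTreeMap<String, DynValue>),
}

/// Hypothetical schema type: the one thing the GUI needs to know about.
#[allow(dead_code)]
#[derive(Debug, Clone, PartialEq)]
enum Schema {
    Number,
    Text,
    Struct(Vec<(String, Schema)>),
}

/// Static path: a derive macro could generate these impls.
/// They are written by hand here to keep the sketch self-contained.
trait HasSchema {
    fn schema() -> Schema;
}

impl HasSchema for f64 {
    fn schema() -> Schema {
        Schema::Number
    }
}

#[allow(dead_code)]
struct Inner {
    b: f64,
}

#[allow(dead_code)]
struct Outer {
    a: Inner,
    c: f64,
}

impl HasSchema for Inner {
    fn schema() -> Schema {
        Schema::Struct(vec![("b".to_string(), f64::schema())])
    }
}

impl HasSchema for Outer {
    fn schema() -> Schema {
        Schema::Struct(vec![
            ("a".to_string(), Inner::schema()),
            ("c".to_string(), f64::schema()),
        ])
    }
}

/// Dynamic path: infer the same schema by walking a runtime value.
fn infer_schema(value: &DynValue) -> Schema {
    match value {
        DynValue::Number(_) => Schema::Number,
        DynValue::Text(_) => Schema::Text,
        DynValue::Map(fields) => Schema::Struct(
            fields
                .iter()
                .map(|(key, child)| (key.clone(), infer_schema(child)))
                .collect(),
        ),
    }
}

/// What a GUI might do with a schema: collect one dotted path per input box.
fn input_paths(schema: &Schema, prefix: &str, out: &mut Vec<String>) {
    match schema {
        Schema::Struct(fields) => {
            for (name, child) in fields {
                let path = if prefix.is_empty() {
                    name.clone()
                } else {
                    format!("{prefix}.{name}")
                };
                input_paths(child, &path, out);
            }
        }
        // Leaves (numbers, text) each get a single input.
        _ => out.push(prefix.to_string()),
    }
}
```

The point is that `input_paths` only ever sees a `Schema`, so it doesn’t care whether the schema came from `Outer::schema()` or from `infer_schema` on a runtime value.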

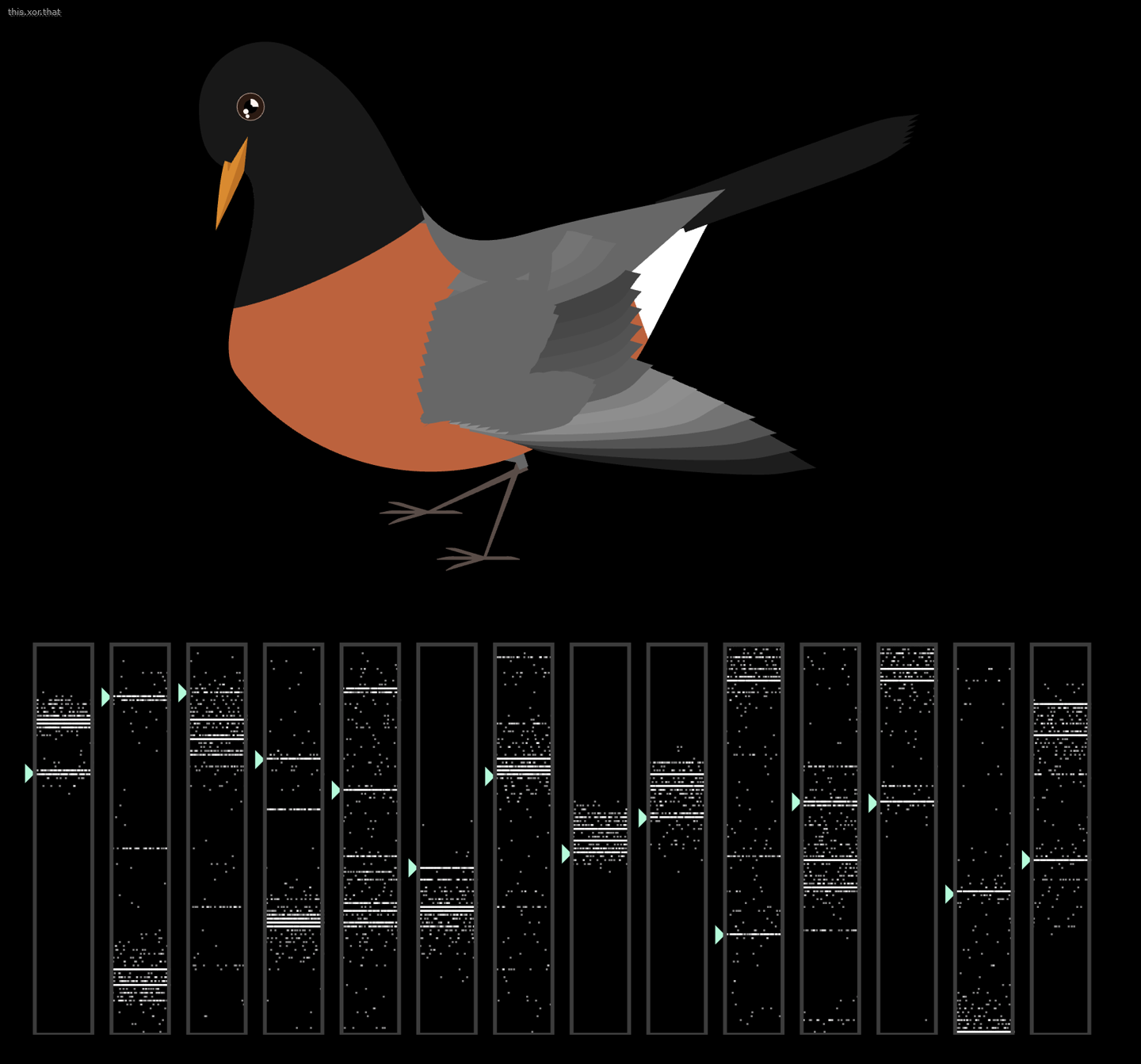

evolving jaunty robins

I’m continuing to wire up the bird parameters to machine learning!

I added a view that shows the bird with parameter sliders and the automatic checks. On each slider, I mark the values that produced valid birds. Even though that’s just the sum over all the other dimensions (whee, marginal), it gives a nice visualization of which areas the model spends more time in. It’s interactive, so it’s also nice for trying values that the model might not be exploring but that you think should work, which then seeds the model.
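As a minimal sketch of that marginal, assuming each sample is a parameter vector normalized to [0, 1] plus a valid/invalid flag (the `marginal_counts` name and the binning are my own assumptions for illustration, not the real code):

```rust
/// Count valid samples per bin along one parameter (`dim`),
/// summing over every other parameter. Assumes values lie in [0, 1].
fn marginal_counts(samples: &[(Vec<f64>, bool)], dim: usize, bins: usize) -> Vec<usize> {
    assert!(bins > 0, "need at least one bin");
    let mut counts = vec![0usize; bins];
    for (params, valid) in samples {
        if !*valid {
            continue; // only valid birds are marked on the slider
        }
        let x = params[dim].clamp(0.0, 1.0);
        // x == 1.0 would land one past the end, so clamp to the last bin.
        let bin = ((x * bins as f64) as usize).min(bins - 1);
        counts[bin] += 1;
    }
    counts
}
```

Each slider would then get its own `marginal_counts(&samples, dim, bins)` call, and taller bins show where valid birds cluster for that parameter.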

I also have a way to provide user feedback about the robins, which the model also learns from, and I can prioritize those robins. It tires me out to label every single robin. It works out better to just highlight the robins that the model predicts will have a low score, so that you can correct those if they’re incorrect, along with the funky-looking birds that it doesn’t score low enough. Or maybe I should make one of those swiping apps for these?
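The “highlight the low scorers” part could be as simple as the sketch below. The `review_queue` name, the threshold, and the idea that scores are plain `f64` values are all assumptions for illustration.

```rust
/// Return the indices of robins whose predicted score is below `threshold`,
/// lowest score first, so the most suspicious predictions get reviewed first.
fn review_queue(predicted_scores: &[f64], threshold: f64) -> Vec<usize> {
    let mut flagged: Vec<usize> = (0..predicted_scores.len())
        .filter(|&i| predicted_scores[i] < threshold)
        .collect();
    // total_cmp gives a total order on f64, so sorting cannot panic on NaN.
    flagged.sort_by(|&a, &b| predicted_scores[a].total_cmp(&predicted_scores[b]));
    flagged
}
```

This only covers the robins the model already thinks are bad. The funky-looking ones it scores too high still need a human to spot them.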

I also started playing with using the learned model to find valid robins that are, say, looking in a certain direction. It’s like, I have the model learn f(param) = bird is looking that way, and then I can flip it around and say “give me parameters where the bird is looking that way.” It reminds me of the neural style transfer tricks of the 2010s, where you take the trained neural network and then use it to optimize the image pixels in a certain way.
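One way to sketch the “flip it around” step is gradient ascent on the parameters while the model stays fixed. This is an illustration under assumptions, not what I’m actually running: `optimize_params` uses finite differences in place of real backpropagation, and `toy_looking_score` is a made-up stand-in for the learned model.

```rust
/// Hypothetical stand-in for a learned f(param): highest when the
/// parameters sit at (0.3, 0.7), lower the further away they get.
fn toy_looking_score(params: &[f64]) -> f64 {
    let target = [0.3, 0.7];
    -params
        .iter()
        .zip(target.iter())
        .map(|(p, t)| (p - t).powi(2))
        .sum::<f64>()
}

/// Gradient ascent on the parameters with the scoring function held fixed.
/// The gradient is estimated with central finite differences.
fn optimize_params<F: Fn(&[f64]) -> f64>(
    score: F,
    start: &[f64],
    steps: usize,
    learning_rate: f64,
) -> Vec<f64> {
    let eps = 1e-4;
    let mut params = start.to_vec();
    for _ in 0..steps {
        let mut grad = vec![0.0; params.len()];
        for i in 0..params.len() {
            let original = params[i];
            params[i] = original + eps;
            let up = score(&params);
            params[i] = original - eps;
            let down = score(&params);
            params[i] = original; // restore before moving to the next dimension
            grad[i] = (up - down) / (2.0 * eps);
        }
        // Step uphill: nudge every parameter toward a higher score.
        for (p, g) in params.iter_mut().zip(&grad) {
            *p += learning_rate * g;
        }
    }
    params
}
```

Calling `optimize_params(toy_looking_score, &[0.9, 0.1], 200, 0.1)` walks the starting parameters toward the toy target. With a real model you’d also want to keep the result inside the valid region, which this sketch ignores.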

anisotropic lace

I felt the need to make something other than jaunty robins, so I got out anisotropic lace and made a weave. The version I liked the best was when it started bugging out and removing some intersections. Maybe it’s time to make that possible to modify!